A Generative System For Creative Expression in Human-AI Collaboration

This thesis investigates the shifting boundaries of art in the era of generative AI, critically examining the essence of art and the legitimacy of AI-generated works. Despite significant advances in the quality and accessibility of art produced with generative AI, such creations frequently meet skepticism about their status as authentic art. To address this skepticism, the study explores the role of creative agency in various generative AI workflows and introduces a "human-in-the-loop" system tailored to image generation models such as Stable Diffusion.

The Latent Auto-recursive Composition Engine (LACE) aims to deepen the artist's engagement with, and understanding of, the creative process. LACE integrates Photoshop and ControlNet with Stable Diffusion to improve transparency and control. This approach not only broadens the scope of computational creativity but also strengthens artists' ownership of AI-generated art, helping bridge the divide between AI-driven and traditional human artistry in the digital landscape.
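The abstract does not spell out LACE's internals, so the following is only a hypothetical sketch of the general human-in-the-loop pattern it describes, not LACE's implementation. The loop alternates model generations with artist edits, feeds each edit back as the next round's conditioning image, and records every intermediate state so the process stays visible. `run_session`, `generate`, and `artist_edit` are illustrative names; the two callables stand in for a Stable Diffusion + ControlNet call and a Photoshop edit, and images are plain strings so the sketch runs without any model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Images are plain strings here so the sketch runs without any model.
Image = str


@dataclass
class Step:
    prompt: str
    condition: Image  # artist-edited image fed back as conditioning (e.g. a ControlNet input)
    output: Image     # what the generator returned for this step


@dataclass
class Session:
    history: List[Step] = field(default_factory=list)


def run_session(
    generate: Callable[[str, Image], Image],
    artist_edit: Callable[[Image, int], Image],
    prompt: str,
    sketch: Image,
    rounds: int,
) -> Session:
    """Alternate model generations with artist edits, keeping every intermediate state."""
    session = Session()
    condition = sketch
    for i in range(rounds):
        output = generate(prompt, condition)
        session.history.append(Step(prompt, condition, output))
        # The artist reworks the latest output; the edit conditions the next round.
        condition = artist_edit(output, i)
    return session


# Hypothetical stand-ins for a Stable Diffusion + ControlNet call and a Photoshop edit.
def fake_generate(prompt: str, condition: Image) -> Image:
    return f"gen({condition})"


def fake_edit(image: Image, i: int) -> Image:
    return f"edit{i}({image})"


session = run_session(fake_generate, fake_edit, "a harbor at dusk", "sketch", rounds=2)
for step in session.history:
    print(step.condition, "->", step.output)
```

Run as written, it prints `sketch -> gen(sketch)` and then `edit0(gen(sketch)) -> gen(edit0(gen(sketch)))`: each generation is conditioned on the artist's latest edit, and the history preserves the whole chain.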

Background

The Essence of Art

The ability of generative AI to create art has sparked scholarly debate for decades. While visually impressive, AI-generated art often lacks contextual meaning and innovative structure. The art community frequently views these creations as mere imitations of human effort, learned statistically from existing works. Assessing AI-generated art is challenging due to the subjective nature of art evaluation and the absence of universal standards for artistic quality.

To advance this discussion, we must clearly define what constitutes art. This definition will help us develop a framework to assess the artistic merit of computer-generated works and explore the complexities of computational creativity.

What Makes Something Art?

Discussions surrounding the definition of art have produced many theoretical frameworks, yet no theory has successfully encompassed all aspects of art. Philosophers such as Morris Weitz have even challenged the pursuit of defining art's essence, arguing that art is an inherently "open concept."

Nevertheless, adopting a broader definition may be beneficial. George Dickie's perspective in "Defining Art" offers a foundational approach to identifying what qualifies as a work of art. Dickie differentiates between the generic concept of "art" and specific sub-concepts such as novels, tragedies, or paintings. He suggests that while these sub-concepts may lack necessary and sufficient conditions, the overarching category of "art" can still be defined.

According to Dickie, two key elements are essential: a) artifactuality—being an artifact created by humans; and b) the conferring of status—where a society or subgroup thereof has recognized the item as a candidate for appreciation.

Dickie's framework seeks to avoid the pitfalls of traditional definitions of art, which often implicitly build in a notion of "good art" and are therefore overly restrictive or dependent on metaphysical assumptions. Instead, his definition aims to reflect the actual social practices of the art world.

Building on this framework, this thesis explores artifactuality, one of the foundational conditions that might define what counts as art in the digital age, through computational methods in the context of generative AI.

Can Humans Distinguish Between Human and Machine-Made Art?

Addressing George Dickie's concept of the "conferring of status," a pivotal inquiry arises: can people discern between artworks created by humans and those generated by machines, especially when the quality of machine-made art rivals that of human creation?

A 2024 study by Kazimierz Rajnerowicz reveals a growing difficulty in distinguishing AI-generated from human-created images, with up to 87% of participants unable to identify them accurately. This difficulty persists even among people with AI knowledge. Rajnerowicz's article examines how individuals judge the authenticity of images and the risks of failing to recognize AI-generated content, and it underscores the need to understand AI advances in order to avoid being deceived by deepfakes and other sophisticated AI techniques.

Lucas Bellaiche et al. examine how people perceive, and derive contextual meaning from, human-made and AI-generated art. Their study indicates that people tend to perceive art as reflecting a human-specific experience, and that creator labels seem to mediate viewers' ability to form deeper evaluations of a work. Thus, according to human raters, non-human AI models can achieve creative products such as art, but only to a limited extent that still protects a valued anthropocentrism.

However, a study by Demmer and colleagues titled "Does an emotional connection to art really require a human artist?" found compelling evidence that participants experienced emotions and attributed intentions to artworks regardless of whether they believed the pieces were created by humans or by computers. This finding challenges the assumption that AI-generated art cannot evoke emotion or convey a sense of human intention, as participants consistently reported emotional responses even to computer-generated images.

Nonetheless, the artwork's origin did have an impact: works by human artists elicited stronger reactions, and viewers often recognized the emotions the human artists intended, suggesting a nuanced perception shaped by the art's actual provenance.

Why Do People Think AI-Generated Artwork Is “Artificial”?

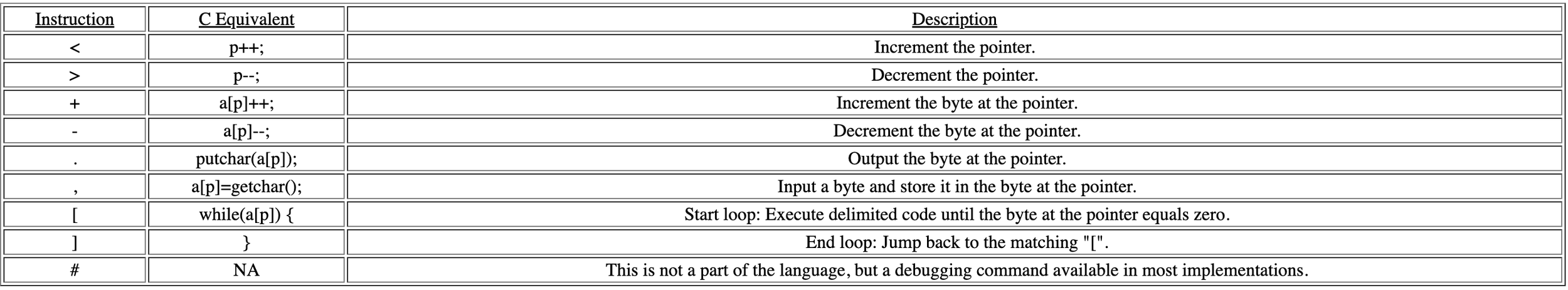

Generative AI empowers artists to manipulate the latent space with ease, creating new artworks through simple prompt modifications. Advanced techniques and tools such as LoRA, ControlNet, and image inpainting provide even greater control over the generated output. Despite these capabilities, many observers remain biased toward viewing computer-generated art as "artificial." This perception stems from several factors:

Lack of Human Touch: AI-generated art lacks a direct human creative process, so viewers tend to see it as less genuine or lacking soul.

Reproducibility: The ability of AI to rapidly produce multiple, similar outputs may reduce the perceived value and uniqueness of each artwork.

Transparency and Understanding: The opaque decision-making process of AI systems often results in doubts about the creativity involved.

Missing Context: AI does not fully understand or express the social, cultural, or political nuances that deepen traditional art, often making its products seem technically proficient yet shallow.
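LoRA (Low-Rank Adaptation), one of the control tools named above, leaves a pretrained weight matrix W frozen and learns a low-rank update, so the adapted weight is W + (α/r)·BA, where B is d_out × r, A is r × d_in, and the rank r is much smaller than either dimension. The plain-Python sketch below shows only that merge arithmetic and the parameter savings; it is not a training loop or any library's API, and the 768-wide layer with rank 4 is an arbitrary illustrative size.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_merge(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A.

    w: frozen base weight, shape (d_out, d_in)
    b: up-projection, shape (d_out, r)
    a: down-projection, shape (r, d_in)
    """
    r = len(a)  # LoRA rank = number of rows in A
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


def trainable_params(d_out: int, d_in: int, r: int) -> Tuple[int, int]:
    """(full fine-tune parameter count, LoRA parameter count) for one weight matrix."""
    return d_out * d_in, r * (d_out + d_in)


w = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 base weight
b = [[1.0], [2.0]]            # rank-1 factors
a = [[0.5, 0.5]]
print(lora_merge(w, b, a, alpha=1.0))  # [[1.5, 0.5], [1.0, 2.0]]
print(trainable_params(768, 768, 4))   # (589824, 6144)
```

For a 768 × 768 layer at rank 4, the update trains 6,144 parameters instead of 589,824, which helps explain why LoRA adapters are far smaller than full model checkpoints.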

Creative Process and Iterative Intent

In his 1964 essay "The Artworld," Arthur Danto argues that art relies on an "artworld," a body of theories, history, and conventions that lets something be recognized as art rather than as a mere object. This notion highlights a pivotal idea: in modern art, the creative process might matter more than the finished artwork. Without the artworld's narratives and contexts, audiences may struggle to grasp a work, since the object alone does not guarantee the intended experience, and the gap between how art is perceived and what it means conceptually can widen. This gap widens further in AI-generated art, where the opaque nature of AI models obscures the creative process and calls the artwork's legitimacy into question.

Artists often find themselves unable to articulate the relationship between their input (text prompts) and the machine-generated output, reducing their sense of ownership over the work. Despite potentially high-quality results, artists might not view these outputs as their own creations, leading to a perception of AI models as the true authors.

Moreover, the iterative nature of creative intent presents further challenges. Artists typically do not start with a clear vision; instead, they develop and refine their goals through the creative process, an approach fundamental in fields such as design, architecture, or illustration, where concepts often evolve through iterative experimentation. For instance, in architectural design, a technique known as "Generative drawing" plays a critical role. Described in "Generative Processes: Thick Drawing" by Karl Wallick, this method involves using drawings not just as tools for documentation but as active participants in the design process. These drawings help conceptualize ideas while integrating both abstract thought and practical execution into a single visual narrative, maintaining visibility of the design process to enhance creative exploration.

This contrasts sharply with the requirements of most generative models, which necessitate a well-defined intent from users, usually articulated through precise text prompts. This rigid structure creates a significant disconnect: if an artist’s intent shifts during the creative process—a common occurrence—the output from the generative model may no longer align with their evolving vision, rendering the quality of the result irrelevant.

Charting artistic authenticity: A new metric for assessing the authenticity of artwork by mapping agency on an additional axis. Here, the spectrum of agency spans from fully autonomous AI-generated art to human-driven creation, offering a nuanced view of artistic origination.

Sense of Agency

Agency in generative artwork refers to the creator's capacity to make independent decisions that significantly affect the outcome. In traditional art, agency is clear-cut: artists consciously choose every detail of their work, from the medium to the message. In AI-generated art, however, agency is more nuanced. The human operator provides text prompts or images, and the AI processes these inputs according to patterns learned from its training data. Many artists contend that presenting raw AI output as one's own work amounts to theft and lacks originality, since these outputs build on other artists' styles, often used without consent and without regard for copyright or the creative thought behind them. Understanding the complexities of agency is therefore crucial in human-AI collaboration, because it bears on the quality and ownership of the work and on the broader impact of computational creativity.

The Importance of Agency

In HCI, studies of the Sense of Agency (SoA), such as Wegner et al.'s "Vicarious agency: experiencing control over the movements of others," commonly measure a person's perceived causal influence over an event or object, and thereby whether that person feels ownership over it. However, these studies focus primarily on augmenting the body, actions, or outcomes rather than internal states such as machine-aided creativity. In art, and particularly in AI-assisted creation, the significance of agency extends further: it can serve as a proposed axis for gauging an artwork's authenticity.

AI art creation traditionally orbits two axes: the architecture grounding the model in training data, and the latent navigator that seeks the desired image from input prompts. This process epitomizes the creative journey in AI art, where the user steers the pre-trained model with tools to match their artistic vision.

Introducing the agency axis reframes the narrative of artistic origin. At one extreme, complete human control yields a work that is entirely the artist's intellectual property, with every creative facet handpicked. At the other, excessive reliance on AI for composition and style risks depersonalizing the art, prompting the art community to devalue such works.

An optimal creative balance is struck when both human and AI inputs interact, fostering a space where creative spontaneity meets deliberate artistry, and ownership over the end product is clear. This intersection becomes a breeding ground for creative discovery, marrying human intention with AI's potential.

Intentional Binding and Human-AI Interaction

A fundamental aspect of human cognition that illuminates our interaction with AI in creative processes is intentional binding. This phenomenon, where individuals perceive a shorter time interval between a voluntary action and its sensory consequence, highlights how the perception of agency influences our engagement with the world. In the context of HCI, especially in AI-enhanced art, understanding intentional binding provides valuable insights into how artists perceive and integrate AI responses into their creative expression.
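In laboratory studies, intentional binding is commonly measured with interval estimation: participants judge the delay between an action and its outcome (for example, a key press and a tone), and binding shows up as shorter judged intervals after voluntary actions than after passive or involuntary ones. The sketch below computes that difference; the numbers are made up for illustration and are not data from any study.

```python
from statistics import mean
from typing import Sequence


def mean_error(estimates_ms: Sequence[float], actual_ms: float) -> float:
    """Average judged interval minus the true interval (negative = judged shorter)."""
    return mean(estimates_ms) - actual_ms


def binding_effect(active_ms: Sequence[float], passive_ms: Sequence[float]) -> float:
    """Passive mean minus active mean; positive = voluntary action compressed perceived time."""
    return mean(passive_ms) - mean(active_ms)


ACTUAL_MS = 250                 # true action-to-tone delay (illustrative)
active = [180, 200, 190, 210]   # made-up judgments after voluntary key presses
passive = [240, 260, 250, 230]  # made-up judgments after passive movements

print("voluntary error:", mean_error(active, ACTUAL_MS))
print("passive error:", mean_error(passive, ACTUAL_MS))
print("binding effect:", binding_effect(active, passive))
```

With these invented values, voluntary-action judgments average 195 ms against a true 250 ms delay, passive ones average 245 ms, and the binding effect is 50 ms, the kind of compression that, by the reasoning above, would signal a stronger sense of agency.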

When artists interact with AI, the immediacy and relevance of the AI's output to the artist's input can affect their sense of control and creative ownership. If the output closely and quickly matches the artist's intention, similar to the effects observed in intentional binding, the artist may experience a greater sense of agency. This heightened perception of control can make AI tools feel more like an extension of the artist’s own creative mind rather than an external agent imposing its own logic.

Therefore, in discussions about agency in AI-generated art, it is crucial to consider how the principles of intentional binding might play a role in shaping the artist's experience of the creative process. This understanding can guide the development of more intuitive AI systems that enhance the artist's agency, promoting a more seamless and satisfying creative partnership.

The Technology of Image Synthesis

The art community has experienced profound transformations over the years, significantly influenced by advancements in artificial intelligence and machine learning. The evolution of generative models has been pivotal in shaping both the capabilities and applications of generative art. In this section, we will explore the history of technology in art.

The Evolution of Generative Models

The development of generative models in image synthesis and artistic creation has seen remarkable transformations since the mid-20th century. Initially, in the 1950s and 1960s, pioneering artists utilized oscilloscopes and analog machines to produce visual art directly derived from mathematical formulas. With the advent of more widely accessible computing technologies in the 1970s and 1980s, artists such as Harold Cohen began to employ these tools to create algorithmic art. Cohen's work with AARON, for instance, involved using programmed instructions to dictate the form and structure of artistic outputs. During the 1980s, the emergence of fractal art marked a significant advancement, employing mathematical visualizations to craft complex and detailed patterns. The 1990s introduced evolutionary art and interactive genetic algorithms, which further democratized the creative process by allowing both artists and viewers to participate in the evolution of artworks.
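The interactive genetic algorithms mentioned above replace an automatic fitness function with human judgment: each generation, a person picks the variants they like, and those become the parents of the next. The sketch below is a minimal, generic version; the three-gene genome (read as arbitrary style parameters in [0, 1]) and the `prefers_warm` function, which simulates a viewer who favors a high first gene, are hypothetical stand-ins for real images and a real person's choices.

```python
import random
from typing import Callable, List

Genome = List[float]  # hypothetical style parameters, each in [0, 1]


def mutate(g: Genome, rng: random.Random, sigma: float = 0.1) -> Genome:
    """Gaussian nudge to every gene, clamped to [0, 1]."""
    return [min(1.0, max(0.0, x + rng.gauss(0.0, sigma))) for x in g]


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Single-point crossover: head of one parent, tail of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def evolve(
    population: List[Genome],
    choose: Callable[[List[Genome]], List[int]],
    generations: int,
    seed: int = 0,
) -> List[Genome]:
    """Interactive GA: `choose` plays the human, returning indices of favored genomes."""
    rng = random.Random(seed)
    for _ in range(generations):
        parents = [population[i] for i in choose(population)]
        children = []
        while len(children) < len(population):
            a, b = rng.choice(parents), rng.choice(parents)
            children.append(mutate(crossover(a, b, rng), rng))
        population = children
    return population


def prefers_warm(pop: List[Genome]) -> List[int]:
    """Simulated viewer: keeps the two genomes with the highest first gene."""
    return sorted(range(len(pop)), key=lambda i: pop[i][0], reverse=True)[:2]


start = [[0.1, 0.5, 0.5], [0.2, 0.5, 0.5], [0.3, 0.5, 0.5], [0.4, 0.5, 0.5]]
final = evolve(start, prefers_warm, generations=20)
print([round(g[0], 2) for g in final])
```

Because `choose` is just a callback, replacing `prefers_warm` with a function that displays the candidates and reads the user's picks turns the same loop into an interactive one.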

The resurgence of neural networks from the late 2000s onward, fueled by advances in GPU computing power and the availability of large datasets, significantly propelled technological innovation. Prominent developments such as Convolutional Neural Networks (CNNs), Variational Autoencoders (VAEs), and Generative Adversarial Networks (GANs) transformed the landscape of generative art. These models revolutionized the field by generating highly realistic images that closely mimic the characteristics of their training data.

Recent advancements, such as Vision Transformers (ViTs), Latent Diffusion Models (LDMs), and Mixture of Experts (MoE) models like RAPHAEL, have pushed the boundaries by enabling the synthesis of complex images from detailed text prompts, facilitated by Contrastive Language-Image Pretraining (CLIP). These innovations merge artistic expression with cutting-edge technology and challenge traditional notions of creativity and the artist's role.
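CLIP's role in text-guided generation rests on a simple scoring step: images and text are encoded into a shared embedding space, both embeddings are L2-normalized, and their cosine similarity, multiplied by a learned temperature, serves as a logit. The sketch below shows only that step. The 3-dimensional vectors are hand-made stand-ins for real encoder outputs, and `logit_scale=100.0` is an assumed value (the CLIP paper caps its learned scale at 100).

```python
import math
from typing import Dict, List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit L2 norm."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity = dot product of the normalized vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def rank_prompts(image_emb: Vector, text_embs: Dict[str, Vector],
                 logit_scale: float = 100.0) -> Dict[str, float]:
    """Softmax over scaled cosine similarities, as in CLIP zero-shot scoring."""
    logits = {t: logit_scale * cosine(image_emb, e) for t, e in text_embs.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {t: math.exp(l - top) for t, l in logits.items()}
    total = sum(exps.values())
    return {t: v / total for t, v in exps.items()}


# Toy 3-d "embeddings" standing in for real CLIP encoder outputs.
image = [0.9, 0.1, 0.0]
prompts = {
    "a watercolor landscape": [1.0, 0.0, 0.0],
    "a city at night": [0.0, 1.0, 0.0],
    "an abstract sculpture": [0.0, 0.0, 1.0],
}
probs = rank_prompts(image, prompts)
print(max(probs, key=probs.get))  # a watercolor landscape
```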

These developments highlight a progression from relatively simple predictive models to sophisticated systems capable of understanding and generating complex visual content, demonstrating that models can encode art concepts into embeddings and later reconstruct or synthesize them for new artistic purposes.
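A common way to probe what such embeddings encode is to interpolate between two latent vectors and decode the points in between. For Gaussian latents, spherical linear interpolation (slerp) is often preferred over straight-line interpolation because intermediate points keep a norm similar to the endpoints, whereas a linear midpoint shrinks toward the origin. The sketch below implements slerp on plain lists; passing the results to an actual decoder is outside its scope.

```python
import math
from typing import List

Vector = List[float]


def lerp(p0: Vector, p1: Vector, t: float) -> Vector:
    """Straight-line interpolation, for comparison."""
    return [(1 - t) * a + t * b for a, b in zip(p0, p1)]


def slerp(p0: Vector, p1: Vector, t: float, eps: float = 1e-7) -> Vector:
    """Spherical linear interpolation between two latent vectors (t in [0, 1])."""
    n0 = math.sqrt(sum(x * x for x in p0))
    n1 = math.sqrt(sum(x * x for x in p1))
    cos_theta = sum(a * b for a, b in zip(p0, p1)) / (n0 * n1)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding outside [-1, 1]
    theta = math.acos(cos_theta)
    if math.sin(theta) < eps:
        # Nearly parallel or opposite vectors: fall back to linear interpolation.
        return lerp(p0, p1, t)
    w0 = math.sin((1 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(p0, p1)]


a, b = [1.0, 0.0], [0.0, 1.0]
mid_s = slerp(a, b, 0.5)
mid_l = lerp(a, b, 0.5)
print(math.hypot(*mid_s), math.hypot(*mid_l))
```

For these two orthogonal unit vectors, the slerp midpoint stays at length 1.0 while the linear midpoint has length about 0.707.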

The Limitations of Text-to-Image Models

Text prompts serve as a versatile and universally accessible method to guide the generation of images. As large language models evolve, text-to-image models are increasingly capable of interpreting both literal and semantic meanings of text prompts. Nevertheless, these models often face challenges in accurately rendering complex or abstract concepts based solely on textual descriptions. This misalignment between the generated content and its intended semantics presents several issues that need addressing.

Misalignment of Text Encoding

Wu et al. (2023) explore the disentanglement capabilities of Stable Diffusion, demonstrating that the model can effectively separate different image attributes. This disentanglement is exposed by switching the input text embedding from a neutral description to a style-specific one during the later stages of the denoising process.

For instance, as illustrated in Figure 1 (Wu et al. 2022), the prompt "A photo of a woman" might yield significantly different results from "A photo of a woman with a smile." Although modifying text embeddings can help segregate different attributes, this approach struggles with fine, localized edits and may be overwhelmed by overly detailed neutral descriptions.
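The mechanism Wu et al. describe, conditioning on a neutral description early in sampling and on a style-specific one later, can be pictured as a per-step schedule that decides which text embedding the denoiser receives. This is a simplified, hypothetical illustration: the hard switch point `switch_at` and the single blend `weight` are assumptions for clarity (Wu et al. optimize their mixing weights rather than fixing them by hand), the vectors are toy stand-ins for encoded prompts, and no diffusion model is called.

```python
from typing import List

Vector = List[float]


def mix(neutral: Vector, style: Vector, weight: float) -> Vector:
    """Linear blend: weight=0 -> neutral embedding, weight=1 -> style embedding."""
    return [(1 - weight) * n + weight * s for n, s in zip(neutral, style)]


def conditioning_schedule(neutral: Vector, style: Vector, num_steps: int,
                          switch_at: int, weight: float = 1.0) -> List[Vector]:
    """Embedding to feed the denoiser at each step, listed in sampling order.

    Steps before `switch_at` (the early steps, where coarse layout is usually
    settled) keep the neutral embedding; later steps receive the style-blended
    one, so in this idealized picture attributes change while composition is kept.
    """
    if not 0 <= switch_at <= num_steps:
        raise ValueError("switch_at must lie within [0, num_steps]")
    styled = mix(neutral, style, weight)
    return [list(neutral) if i < switch_at else list(styled) for i in range(num_steps)]


# Toy 2-d vectors standing in for "A photo of a woman" / "... with a smile" encodings.
neutral = [0.0, 0.0]
smile = [1.0, 1.0]
schedule = conditioning_schedule(neutral, smile, num_steps=5, switch_at=3, weight=0.5)
for step, emb in enumerate(schedule):
    print(step, emb)
```

Moving `switch_at` earlier hands more of the sampling trajectory to the style embedding, which in this idealized picture trades content preservation for a stronger attribute change.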

Moreover, reliance on prompt engineering runs into inherent limits, because the model's semantic interpretation can diverge significantly from human understanding. What prompts can express is typically bounded by the labels in the training dataset. For instance, using specialized terminology such as "monogram" to describe a simple "black and white" graphic may lead to unexpected results, largely because the dataset's annotators had limited domain-specific knowledge.

Even if semantic understanding improves and text embeddings align better with image and art concepts as image generation models grow larger and more complex, users may still struggle to articulate abstract concepts in text. The core challenge is the discrepancy between how datasets are annotated and how users describe their desired outcomes, even when both draw on the same vocabulary.


GitHub repository: https://github.com/iamkaikai/LACE